Published on Apr 02, 2024

Human-robot interaction has been developing through a variety of technologies. Controlling a robotic vehicle with gestures is a prominent way of enhancing this interaction: the user does not need any physical contact with the device, which helps bridge the technological gap in interactive systems. In this project, the robotic vehicle is directed by recognizing the user's real-time gesture commands, using image processing algorithms and embedded techniques.

Human gestures enhance human-robot interaction by making it independent of input devices. Gestures offer a more natural, rich, and intuitive way of controlling the robot. The main purpose of gesture recognition research is to identify a particular human gesture and convey the information associated with it. Once a gesture of interest has been identified from the set of known gestures, a corresponding command can be issued to the robotic system to execute an action.

A prominent benefit is that gestures provide a natural way to send commands to the robot, such as forward, backward, left, and right movements. To provide a feasible, user-friendly interface, the user can command a wireless robot with hand gestures. Earlier devices were mainly used for navigating and controlling a robot without any natural medium of interaction. This paper deals with interfacing a robot through a gesture-controlled technique while the robot operates at a distance from the user, which can be achieved through image processing. Hand gesture recognition has commonly been implemented using "data gloves", which adds cost and puts the technology out of reach for most people.

A webcam is an easily available device, and nearly every laptop today has one built in. In this project, a hand gesture recognizer capable of detecting a moving hand and its gesture in webcam frames is implemented. In the future, the interface may be made even more natural and comfortable to use. Commands are generated at the control station and sent to the robot over ZigBee, within the ZigBee range. The robot then moves in the direction specified by the command.

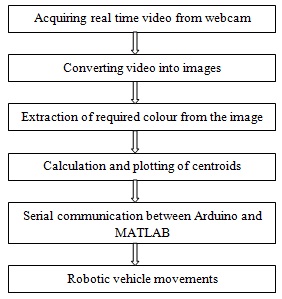

The system's built-in webcam captures real-time video of the gestures, and color markers are used to interpret the gesture movements. Figure 1 shows the basic flow of the system. A ZigBee Series 2 module is used to transmit the gesture commands wirelessly [6]. The ZigBee coordinator is connected to the computer's serial port through a USB Explorer board. The ZigBee router is mounted on a voltage regulator board that regulates its supply to 3.3 V. An Arduino board is used to control the robotic vehicle.

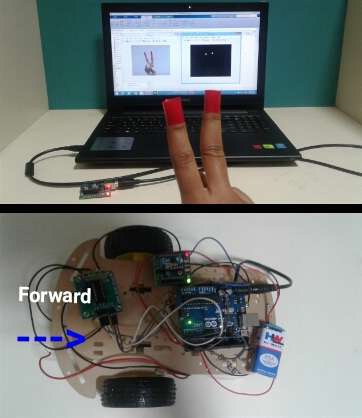

The user wears colored tapes to provide gesture input to the system. The computer's built-in webcam serves as the sensor that detects the user's hand movements. It captures real-time video at a fixed frame rate and resolution, both determined by the camera hardware.

Color plays an important role in image processing. Each image is an array of M × N pixels, with M rows and N columns [9]. Each pixel holds a value for red, green, and blue, and colors can be distinguished by applying threshold values to these RGB components [10]. The color detection process uses this to locate the required color in an image.
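To make the thresholding idea concrete, here is a minimal Python sketch that marks a pixel as red when its red component is high and its green and blue components are low. The threshold values `RED_MIN` and `OTHER_MAX` and the helper names are hypothetical, since the article does not state the thresholds it uses, and the image is represented as an M × N list of rows of (R, G, B) tuples rather than a MATLAB array.

```python
# Hypothetical thresholds: the article does not report the values it uses.
RED_MIN = 150    # red component must be at least this bright (0-255 scale)
OTHER_MAX = 100  # green and blue components must not exceed this


def is_red(pixel, red_min=RED_MIN, other_max=OTHER_MAX):
    """Return True if an (R, G, B) pixel falls inside the 'red' threshold box."""
    r, g, b = pixel
    return r >= red_min and g <= other_max and b <= other_max


def red_mask(image, red_min=RED_MIN, other_max=OTHER_MAX):
    """Classify every pixel of an M x N image (list of rows of RGB tuples)."""
    return [[is_red(p, red_min, other_max) for p in row] for row in image]


image = [
    [(200, 30, 40), (90, 90, 90)],
    [(10, 200, 10), (255, 0, 0)],
]
print(red_mask(image))  # [[True, False], [False, True]]
```

Other colors would use the same pattern with the roles of the channels swapped.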

Gesture recognition can be seen as a way for computers to begin to understand human body language, building a richer bridge between machines and humans than primitive text user interfaces or even GUIs (graphical user interfaces). Gesture recognition lets humans interface with the robot and interact naturally without any mechanical devices. Here, the detected color and its position are interpreted as gestures [13], and the robot moves according to the recognized gesture.

An Arduino Uno board controls the robotic vehicle according to the user's input gestures [14]. Serial communication between the Arduino and MATLAB is established wirelessly through ZigBee.

The user provides the real-time video input. The video is sliced into individual images at a particular frame rate [16], and each acquired image is converted to grayscale to obtain its intensity information.
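Slicing the video at a particular frame rate can be sketched as keeping every k-th frame of the camera stream, with k chosen from the camera's native rate and the desired processing rate. The helper `sample_frames` below is hypothetical and is not the article's code; in MATLAB a comparable effect is usually obtained through the `FrameGrabInterval` property of a `videoinput` object.

```python
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


def sample_frames(frames: Iterable[T], camera_fps: float,
                  target_fps: float) -> Iterator[T]:
    """Yield roughly target_fps frames per second from a camera_fps stream.

    Hypothetical helper: keeps every `step`-th frame and drops the rest.
    """
    if camera_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    # Never use a step below 1, even if target_fps exceeds camera_fps.
    step = max(1, round(camera_fps / target_fps))
    for index, frame in enumerate(frames):
        if index % step == 0:
            yield frame


# A 30 fps camera processed at about 10 fps keeps every third frame.
print(list(sample_frames(range(10), camera_fps=30, target_fps=10)))  # [0, 3, 6, 9]
```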

The true-color RGB image is converted into a grayscale image. In a grayscale digital image, the value of each pixel is a single sample; that is, it carries only intensity information. Compared with a color image, a grayscale image reduces computational complexity, so all the necessary operations were performed after converting the image to grayscale.
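The article does not give its conversion formula. A common choice, and the one documented for MATLAB's `rgb2gray`, is a weighted sum of the channels using the ITU-R BT.601 luma weights 0.2989, 0.5870, and 0.1140. A minimal Python sketch, assuming 8-bit images stored as lists of rows of (R, G, B) tuples:

```python
# ITU-R BT.601 luma weights, as documented for MATLAB's rgb2gray.
WEIGHTS = (0.2989, 0.5870, 0.1140)


def to_grayscale(image):
    """Convert an 8-bit RGB image (list of rows of (R, G, B) tuples) to grayscale.

    Each output pixel is a single intensity sample in 0-255.
    """
    wr, wg, wb = WEIGHTS
    return [
        [min(255, max(0, round(wr * r + wg * g + wb * b))) for (r, g, b) in row]
        for row in image
    ]


print(to_grayscale([[(255, 255, 255), (0, 0, 0)], [(255, 0, 0), (0, 0, 255)]]))
# [[255, 0], [76, 29]]
```

The green weight dominates because the eye is most sensitive to green, which is why a saturated red marker ends up with a fairly low gray value.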

The required color (red) is extracted from the RGB image by subtraction: the grayscale image is subtracted from the corresponding color channel, so only regions where that channel dominates the overall intensity remain bright. Red, green, or blue objects can be detected in the same way by choosing the matching channel. This produces an image that contains the detected object as a patch of gray surrounded by black.
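The subtraction step can be sketched as follows, assuming the grayscale image has already been computed and both images are 8-bit. Negative differences are clamped to 0, mirroring how subtraction saturates for `uint8` data in MATLAB, so only pixels where the chosen channel exceeds the overall intensity survive. The function name and the list-of-rows layout are illustrative assumptions.

```python
def subtract_channel(image, gray, channel=0):
    """Return channel minus grayscale for each pixel, clamped at 0.

    image:   list of rows of 8-bit (R, G, B) tuples.
    gray:    matching list of rows of 8-bit grayscale values.
    channel: 0 = red, 1 = green, 2 = blue.
    Clamping at 0 mimics uint8 saturation, so only pixels where the chosen
    channel is brighter than the overall intensity stay non-zero.
    """
    return [
        [max(0, pixel[channel] - g) for pixel, g in zip(img_row, gray_row)]
        for img_row, gray_row in zip(image, gray)
    ]


# A pure red pixel, a mid-gray pixel and a pure green pixel.
image = [[(255, 0, 0), (128, 128, 128), (0, 255, 0)]]
gray = [[76, 128, 150]]
print(subtract_channel(image, gray))  # [[179, 0, 0]]
```

Only the red marker survives: the neutral gray pixel cancels out and the green pixel goes negative before clamping.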

The gray region obtained after subtraction must be converted to a binary image to find the region of the detected object. A grayscale image is a matrix holding the value of each pixel, and image thresholding is a simple yet effective way of partitioning it into foreground and background: pixels above the threshold become white and the rest become black. The result is a monochromatic image containing only black and white pixels. This conversion to binary is required to find the properties of the detected regions.
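A minimal thresholding sketch in Python. The threshold value of 50 used in the example is hypothetical; the article does not report the level it applies.

```python
def to_binary(diff, threshold):
    """Threshold a grayscale difference image into foreground (1) and background (0).

    Pixels strictly brighter than `threshold` become 1 (white); all others 0 (black).
    """
    return [[1 if value > threshold else 0 for value in row] for row in diff]


# Hypothetical threshold of 50 on the 0-255 scale.
print(to_binary([[179, 0], [40, 60]], threshold=50))  # [[1, 0], [0, 1]]
```

A lower threshold picks up fainter markers but also more background noise, so in practice the level would be tuned to the lighting conditions.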

To control the robot, the system needs a point whose coordinates represent each detected region; with these coordinates, the system can determine the robotic movements. The centroid is therefore calculated for each detected region. The output of the centroid function is a matrix containing the X (horizontal) and Y (vertical) coordinates of each centroid.
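Counting gestures requires separating the binary image into distinct regions before taking centroids. The sketch below labels 8-connected foreground regions with a breadth-first flood fill and returns each region's mean column and row, similar in spirit to MATLAB's `bwlabel` and `regionprops` with the `'Centroid'` property, except that coordinates here are 0-based rather than 1-based. The `min_area` filter for discarding small noise blobs is an added assumption, not something the article describes.

```python
from collections import deque


def centroids(binary, min_area=1):
    """Return [(x, y), ...] centroids of 8-connected foreground regions.

    binary: list of rows of 0/1 values. Coordinates are 0-based: x is the
    column (horizontal) index and y is the row (vertical) index. Regions
    with fewer than `min_area` pixels are ignored as noise (assumption).
    """
    rows = len(binary)
    cols = len(binary[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    result = []
    for r0 in range(rows):
        for c0 in range(cols):
            if not binary[r0][c0] or seen[r0][c0]:
                continue
            # Breadth-first flood fill of one region, collecting its pixels.
            queue = deque([(r0, c0)])
            seen[r0][c0] = True
            pixels = []
            while queue:
                r, c = queue.popleft()
                pixels.append((r, c))
                # Visit all 8 neighbours (the pixel itself is already seen).
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        nr, nc = r + dr, c + dc
                        if (0 <= nr < rows and 0 <= nc < cols
                                and binary[nr][nc] and not seen[nr][nc]):
                            seen[nr][nc] = True
                            queue.append((nr, nc))
            if len(pixels) >= min_area:
                x = sum(c for _, c in pixels) / len(pixels)
                y = sum(r for r, _ in pixels) / len(pixels)
                result.append((x, y))
    return result


binary = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 1],
]
found = centroids(binary)
print(found)       # [(0.5, 0.5), (4.0, 1.5)]
print(len(found))  # 2 regions, i.e. two centroids
```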

The number of centroids is transmitted to the ZigBee coordinator via the computer's COM port. The ZigBee router in the wireless network receives the data from the coordinator and passes it to the Arduino.
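The article does not specify how the count is encoded on the serial link. Assuming the ZigBee modules run in transparent mode, so that bytes written to the coordinator's COM port arrive unchanged at the router, one simple hypothetical format is a single ASCII digit per command. The `send_count` helper below writes to any binary stream; an in-memory buffer stands in for the port here.

```python
import io
from typing import BinaryIO


def send_count(port: BinaryIO, count: int) -> bytes:
    """Write a centroid count as one ASCII digit (hypothetical wire format).

    `port` is any writable binary stream. With ZigBee in transparent mode,
    the bytes written here would be forwarded unchanged to the router side.
    """
    if not 0 <= count <= 9:
        raise ValueError("count must fit in a single ASCII digit")
    payload = str(count).encode("ascii")
    port.write(payload)
    return payload


# An in-memory buffer stands in for the COM port in this demonstration.
buffer = io.BytesIO()
send_count(buffer, 2)
print(buffer.getvalue())  # b'2'
```

With the pyserial package, a `serial.Serial` object opened on the coordinator's COM port could be passed as `port` instead of the buffer; the port name and baud rate would have to match the XCTU configuration.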

The Arduino sends the corresponding command to the robotic vehicle, which performs the appropriate movement: forward, reverse, left turn, or right turn.

The system was developed in MATLAB R2015a on Windows 8.1, using the Image Acquisition Toolbox, Image Processing Toolbox, and Instrument Control Toolbox. XCTU software is used to configure the ZigBee modules as coordinator and router, and an Arduino Uno controls the robotic vehicle. The real-time gesture is acquired through the system's webcam. Figure 2 shows different real-time gesture inputs with their corresponding grayscale image, subtracted image, binary image, and centroid plot. The number of centroids determines the movement: with one centroid the vehicle moves left, with two it moves forward, with three it moves right, and with four it moves backward. Figure 3 shows the robotic movement for each input gesture.
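The count-to-movement rule described above can be written as a lookup table. Treating any other count (for example, zero when no markers are visible) as "stop" is an assumption added for safety; the article does not say what happens in that case, and `movement_for` is a hypothetical name.

```python
# Mapping stated in the article: 1 -> left, 2 -> forward, 3 -> right, 4 -> backward.
MOVES = {1: "left", 2: "forward", 3: "right", 4: "backward"}


def movement_for(count: int) -> str:
    """Return the movement for a centroid count.

    Any count outside 1-4 maps to "stop"; this fallback is an assumption,
    not something the article specifies.
    """
    return MOVES.get(count, "stop")


for n in range(6):
    print(n, movement_for(n))
```

Keeping the rule in a table rather than a chain of conditionals makes it easy to add gestures later without touching the dispatch logic.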

The gesture-controlled robot system provides an alternative way of controlling robots, letting the user control a smart environment through a hand gesture interface. The technique demonstrates the use of MATLAB image processing tools to detect and count the centroids in a gesture image. Because the gesture commands are transmitted over ZigBee, the robot's operating range is extended. The approach eliminates external hardware such as a remote control for navigating the robot in different directions, and it reduces human effort in risky environments.

[1] Mukti Yadav, Mrinal Yadav, and Hemant Sapra, "MATLAB based Gesture Controlled Robot", Journal of Basic and Applied Engineering Research, Vol. 3, Issue 2, January–March 2016.

[2] Aditya Purkayastha, Akhil Devi Prasad, Arunav Bora, Akshaykumar Gupta, and Pankaj Singh, "Hand Gestures Controlled Robotic Arm", Journal of International Academic Research for Multidisciplinary, Vol. 2, Issue 4, May 2014.

[3] Daggu Venkateshwar Rao, Shruti Patil, Naveen Anne Babu, and V. Muthukumar, "Implementation and Evaluation of Image Processing Algorithms on Reconfigurable Architecture using C-based Hardware Descriptive Languages", International Journal of Theoretical and Applied Computer Sciences, Vol. 1, pp. 9–34, 2006.
